
The Price Of Knowledge

Fideres investigates competition issues in academic journal markets.

The Fair Housing Act was enacted in 1968 to prohibit discrimination in housing, including mortgage lending. However, more than 50 years later, discrimination continues to pervade the US mortgage market. Academic literature shows that minority borrowers routinely face higher interest rates and higher denial rates. A 2015 paper in The Journal of Real Estate Finance and Economics found that “black borrowers on average pay about 29 basis points more than comparable white borrowers.”1 A 2018 study by Reveal from The Center for Investigative Reporting found that black/latinx borrowers were more likely than white borrowers to be denied mortgages in 61 US metro areas.2

Over the past decade, regulators and private plaintiffs have attempted to address discriminatory mortgage lending. In 2011 and 2012, the Department of Justice (DOJ) reached Fair Lending settlements with Wells Fargo and Countrywide for $175m and $335m, respectively. These cases alleged that the banks charged black/latinx borrowers higher fees and interest rates and steered them into more expensive subprime mortgages even when they qualified for better terms. Private litigants have also made multiple attempts to address this discrimination, but have consistently failed at class certification for lack of commonality.3

In previous class action lawsuits, plaintiffs alleged that banks had a “Discretionary Pricing Policy” that allowed loan officers to adjust the terms of a mortgage, and argued that this produced a disparate impact on minority borrowers whenever loan officers held racial biases. However, in Wal-Mart Stores, Inc. v. Dukes (2011), the Supreme Court held that a policy of allowing discretion, without a common mode of exercising it, was insufficient to establish the commonality required for class certification.

The traditional theory of harm largely blames individual loan officers, but our research suggests this picture is incomplete. We have additionally identified discrimination stemming from a centrally defined modus operandi: algorithms.

Our findings show entrenched discrimination in algorithms that goes beyond biased loan officers. These discriminatory algorithms are a substantial and widely underappreciated source of harm, and because the same algorithm prices every loan, the harm they cause is common to all borrowers.

By shifting the focus of Fair Lending litigation to algorithms, we can directly address the commonality issues raised by Dukes. In previous cases, defendants have defeated class certification by deflecting blame onto ‘bad apple’ loan officers. Defendants cannot, however, blame individual loan officers for discrimination embedded in their algorithmic, centralized decision-making processes.

The Home Mortgage Disclosure Act (HMDA) of 1975 requires financial institutions to report details of the mortgage applications they receive and the loans they originate each year to the Federal Financial Institutions Examination Council. Using HMDA data, we can study the relationship between race and rate spreads while controlling for various borrower and loan characteristics. The data also contains an identification code for each lender, which we can use to identify fintech-originated loans. Unfortunately, it does not include borrower credit scores, so we cannot control for them directly.
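Before any comparison, the loan-level records have to be narrowed to a like-for-like group; footnote 6 lists the criteria we used for the maps (conventional, 30-year, owner-occupied, first-lien, no reverse, commercial, or balloon loans). A minimal Python sketch of such a filter follows. The field names and numeric codes are assumptions modeled on the public 2018 HMDA loan/application register and should be checked against the FFIEC data dictionary; the sketch also assumes values are already parsed into integers and omits the outlier trimming.

```python
# Hypothetical filter for a like-for-like HMDA comparison group.
# ASSUMPTION: field names and codes follow the 2018 HMDA loan/application
# register; verify them against the official FFIEC data dictionary.
CONVENTIONAL = 1    # loan_type: conventional
FIRST_LIEN = 1      # lien_status: secured by a first lien
OWNER_OCC = 1       # occupancy_type: principal residence
NOT_FLAGGED = 2     # "no" code for reverse_mortgage, balloon_payment, etc.
THIRTY_YEARS = 360  # loan_term is recorded in months


def in_comparison_group(rec: dict) -> bool:
    """True if a parsed loan record matches the comparison-group criteria."""
    return (
        rec["loan_type"] == CONVENTIONAL
        and rec["loan_term"] == THIRTY_YEARS
        and rec["occupancy_type"] == OWNER_OCC
        and rec["lien_status"] == FIRST_LIEN
        and rec["reverse_mortgage"] == NOT_FLAGGED
        and rec["business_or_commercial_purpose"] == NOT_FLAGGED
        and rec["balloon_payment"] == NOT_FLAGGED
    )


# Usage with two made-up records: only the first (a 30-year loan) qualifies.
loans = [
    {"loan_type": 1, "loan_term": 360, "occupancy_type": 1, "lien_status": 1,
     "reverse_mortgage": 2, "business_or_commercial_purpose": 2, "balloon_payment": 2},
    {"loan_type": 1, "loan_term": 180, "occupancy_type": 1, "lien_status": 1,
     "reverse_mortgage": 2, "business_or_commercial_purpose": 2, "balloon_payment": 2},
]
sample = [rec for rec in loans if in_comparison_group(rec)]
```

Keeping every criterion in one predicate makes it easy to apply the same definition to the maps and the regressions.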

The fintech lenders we identified are Quicken Loans, AmeriSave Mortgage, CashCall Inc., Guaranteed Rate Inc., Homeward Residential, and Movement Mortgage.4 Quicken Loans is not only the largest of these fintech lenders but also the largest mortgage lender in the U.S.5

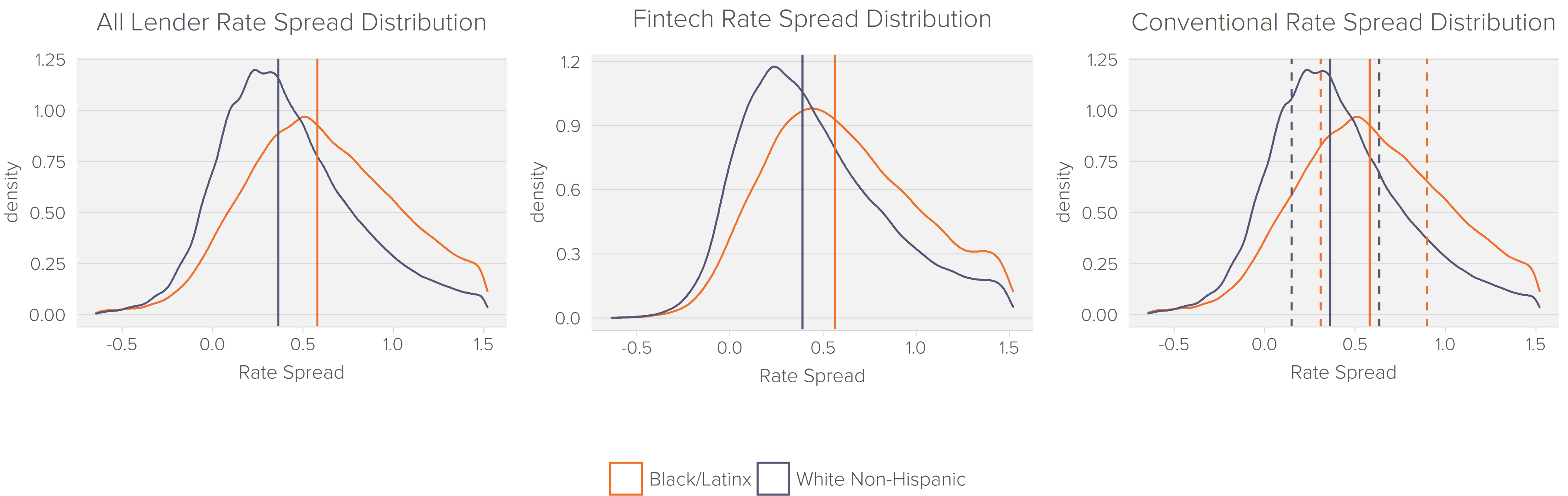

The 2018 HMDA data clearly shows that black/latinx borrowers face higher interest rates and rejection rates. We focused on rate spreads: the rate spread is the difference between the Annual Percentage Rate (APR) on a specific loan and the Average Prime Offer Rate (APOR) for a comparable loan. Examining rate spreads rather than raw interest rates lets us isolate variation driven by borrower characteristics from variation driven by loan terms or movements in benchmark market rates. There is a striking racial difference in rate spreads across all types of lenders.
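HMDA reports the rate spread for each loan directly, but the arithmetic is worth seeing. The minimal Python sketch below uses made-up APR and APOR values (not taken from the HMDA data) to show why the spread helps: two loans priced on different dates can carry different APRs yet sit the same distance above the benchmark.

```python
from decimal import Decimal


def rate_spread_bps(apr_pct: Decimal, apor_pct: Decimal) -> Decimal:
    """Rate spread in basis points: (APR - APOR) in percentage points x 100."""
    return (apr_pct - apor_pct) * 100


# Hypothetical loans: B's APR is higher only because market rates were higher
# when its rate was set, so both loans sit 25 bps above the benchmark.
loan_a = rate_spread_bps(Decimal("4.65"), Decimal("4.40"))
loan_b = rate_spread_bps(Decimal("4.95"), Decimal("4.70"))
print(loan_a, loan_b)  # both equal 25 bps
```

`Decimal` is used so that the percentage-point subtraction is exact rather than subject to binary floating-point rounding.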

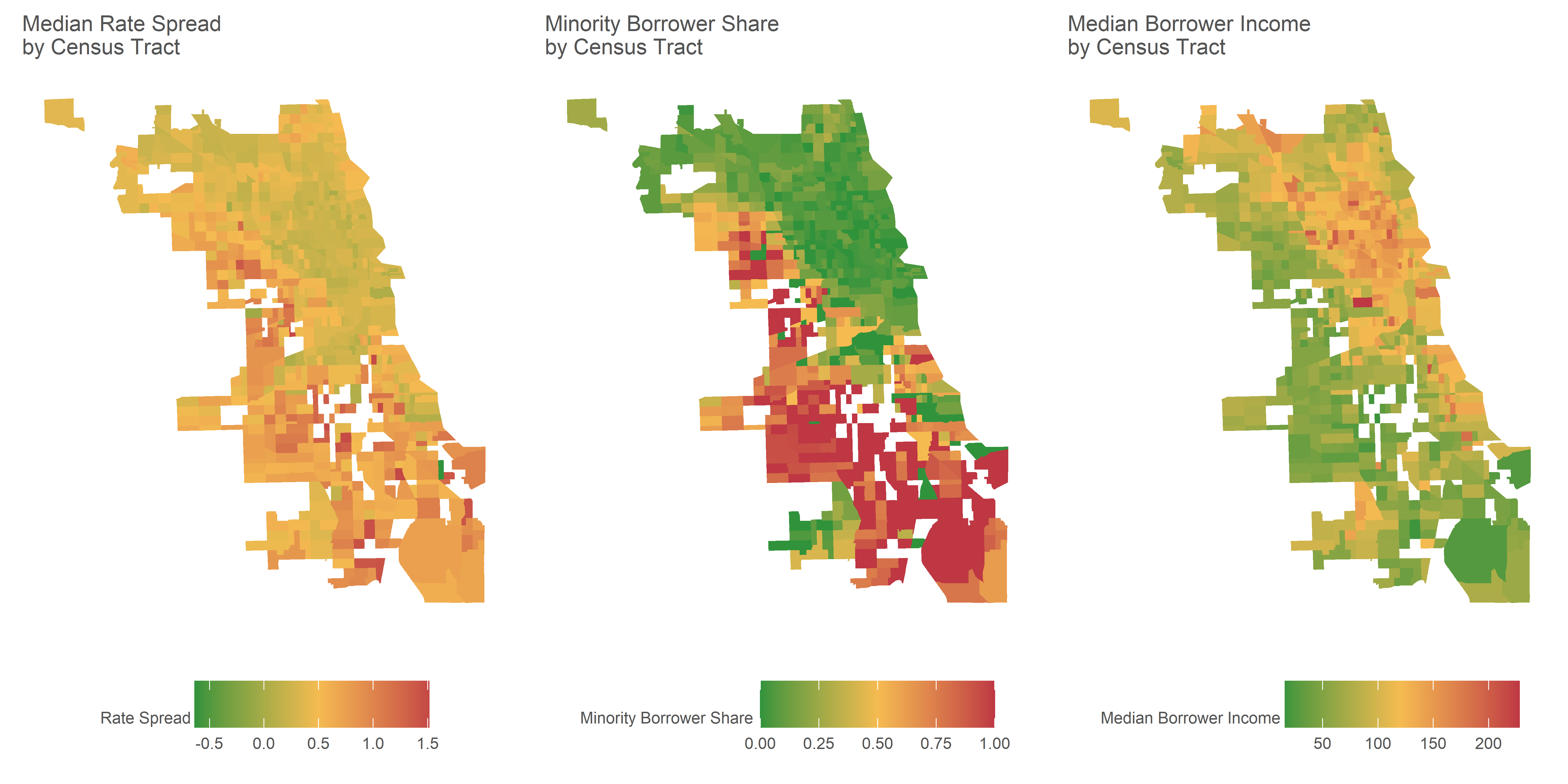

We can also visualize this data geographically. Within America’s largest cities, communities are segregated by race, and interest rates follow the same lines. Using the 2018 HMDA data, we mapped the distribution of rate spreads, income, and ethnicity across census tracts in Chicago.6

Chicago 2018

These maps show that loans in low-income white communities typically carry lower rate spreads than loans in low-income black/latinx communities. In other words, low-income minority communities cannot access credit on the same terms as low-income white communities.
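Maps like these start from loan-level rate spreads aggregated by census tract. The minimal Python sketch below is illustrative only: it assumes a median per tract (the statistic behind the original maps is not stated), treats tracts below 80% of area median income as low-income, and splits tracts at a 50% minority share. Those cutoffs, the function names, the tract IDs, and all values are invented for the example.

```python
from collections import defaultdict
from statistics import mean, median


def tract_median_spreads(loans):
    """Group (tract_id, rate_spread_bps) pairs by census tract; take medians."""
    by_tract = defaultdict(list)
    for tract, spread in loans:
        by_tract[tract].append(spread)
    return {tract: median(spreads) for tract, spreads in by_tract.items()}


def low_income_gap(medians, tract_info, income_cutoff=80.0, minority_cutoff=50.0):
    """Mean tract-median spread in low-income majority-minority tracts minus
    the same quantity in low-income majority-white tracts.

    tract_info maps tract_id -> (tract income as % of area median,
    minority population share in %). Tracts at or above income_cutoff
    are not low-income and are skipped. Raises statistics.StatisticsError
    if either group of low-income tracts is empty.
    """
    minority, white = [], []
    for tract, spread in medians.items():
        income_pct, minority_pct = tract_info[tract]
        if income_pct >= income_cutoff:
            continue
        (minority if minority_pct >= minority_cutoff else white).append(spread)
    return mean(minority) - mean(white)


# Invented example: tracts B and D are low-income majority-minority,
# A is low-income majority-white, C is not low-income.
loans = [("A", 20), ("A", 40), ("B", 60), ("C", 10), ("D", 80)]
info = {"A": (60, 20), "B": (70, 85), "C": (150, 10), "D": (75, 90)}
medians = tract_median_spreads(loans)
print(low_income_gap(medians, info))  # 40.0 bps in this toy data
```

A median is less sensitive than a mean to the handful of extreme spreads that a small tract can contain.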

This appears to happen at a highly localized level. The relationship is less clear at a national level.

The HMDA data also supports statistical modeling. We therefore ran a multivariate ordinary least-squares (OLS) regression of rate spreads on loan, borrower, and area characteristics across each individual mortgage. Alongside race, our variable of interest, we controlled for a number of crucial variables, including property characteristics, loan-to-value ratio, gender, age, debt-to-income ratio, and minority population share.
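The regression itself can be sketched in a few lines. The Python below is a minimal illustration on synthetic data, not our model or HMDA records: it regresses rate spreads on a 0/1 minority indicator and two of the controls named above (loan-to-value and debt-to-income ratios), with a 10 bps gap deliberately built into the simulated data. The coefficient on the indicator estimates the gap in bps holding the controls fixed; the actual model included further controls. It solves the normal equations directly with the standard library, which is fine for illustration but not how one would fit millions of loans.

```python
import random


def ols(X, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y.

    X is a list of rows, each starting with 1.0 for the intercept. The system
    is solved by Gauss-Jordan elimination with partial pivoting, which is
    adequate for a handful of regressors.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        # Swap in the row with the largest pivot for numerical stability.
        p = max(range(col, k), key=lambda row: abs(A[row][col]))
        A[col], A[p] = A[p], A[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


# Synthetic loans (NOT HMDA data): rate spread in bps depends on a 0/1
# minority indicator plus two controls, with a built-in 10 bps gap.
random.seed(0)
X, y = [], []
for _ in range(5000):
    minority = 1.0 if random.random() < 0.3 else 0.0
    ltv = random.uniform(60, 97)   # loan-to-value ratio, %
    dti = random.uniform(15, 50)   # debt-to-income ratio, %
    X.append([1.0, minority, ltv, dti])
    y.append(40 + 10 * minority + 0.5 * ltv + 0.3 * dti + random.gauss(0, 15))

intercept, gap_bps, b_ltv, b_dti = ols(X, y)
print(f"estimated gap: {gap_bps:.1f} bps")  # close to the built-in 10 bps
```

Because race enters as an indicator, its coefficient reads directly as "extra basis points for a minority borrower with the same controls".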

We then split the data into four groups: all lenders, all conventional lenders, the top six conventional lenders, and fintech lenders.7 We ran the regression on each group. Controlling for the characteristics above, we identified 7-11 basis points (bps) of discrimination, depending on the lender type. Fintech lenders were the least discriminatory, charging black/latinx borrowers rate spreads 7 bps higher, holding the controls fixed. The all-conventional-lenders group showed the largest gap, with rate spreads 11 bps higher for black/latinx borrowers. These estimates are statistically significant at the 1% level (99% confidence): if there were no true difference, gaps this large would be very unlikely to arise from chance variation alone.
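To make the significance statement concrete, the minimal Python sketch below computes a two-sided p-value for a regression coefficient using the normal approximation, which is reasonable with loan-level samples of this size. The coefficient and standard-error values are hypothetical (the standard errors behind our estimates are not reported here), and the function names are illustrative.

```python
import math


def two_sided_p_value(coef: float, std_err: float) -> float:
    """p-value for H0: coef == 0, using the normal approximation to the
    t distribution (reasonable when there are very many observations)."""
    z = abs(coef / std_err)
    return math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))


def significant(coef: float, std_err: float, alpha: float = 0.01) -> bool:
    """True if the estimate is significant at level alpha (0.01 = 1% level)."""
    return two_sided_p_value(coef, std_err) < alpha


# Hypothetical estimates in bps (illustrative, not the study's standard errors).
print(significant(11.0, 2.0))  # z = 5.5, p is tiny -> True
print(significant(1.0, 2.0))   # z = 0.5, p is about 0.62 -> False
```

At the 1% level the cutoff is |z| of roughly 2.576, so an estimate must be about 2.6 standard errors away from zero to qualify.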

The HMDA data shows that fintech lenders, which rely on algorithms rather than loan officers, still discriminate against minorities, albeit to a lesser extent than conventional lenders. This is consistent with our theory that discrimination results from a mix of algorithmic and discretionary decision making.

It is also consistent with results presented by researchers at UC Berkeley, whose research showed that discrimination was prevalent even in algorithmic fintech lending, with lenders charging “otherwise-equivalent Latinx/African-American borrowers 7.9 bps higher rates for purchase mortgages.”8 Unlike them, we cannot control directly for credit scores, but this does not appear to alter the results fundamentally. They identified 5 bps of discrimination by fintech lenders, while we identified 7 bps, suggesting that roughly 2 bps of our estimate may be explained by credit scores.
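The inference about the 2 bps rests on omitted-variable bias. The minimal Python simulation below shows the mechanism, with every parameter invented for illustration: if minority borrowers have lower credit scores on average and lower scores raise spreads, a regression that omits scores attributes part of the score effect to race. It is not an estimate of the actual credit-score effect in HMDA data.

```python
import random


def slope(x, y):
    """OLS slope of y on x, with an intercept."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def residualize(x, y):
    """Residuals from regressing y on x (with an intercept)."""
    b = slope(x, y)
    a = sum(y) / len(y) - b * sum(x) / len(x)
    return [yi - (a + b * xi) for xi, yi in zip(x, y)]


# Hypothetical world: minority borrowers have lower scores on average, lower
# scores raise spreads, and the true race gap is 5 bps.
random.seed(1)
d, score, spread = [], [], []
for _ in range(20000):
    m = 1.0 if random.random() < 0.3 else 0.0
    s = 720 - 30 * m + random.gauss(0, 40)
    d.append(m)
    score.append(s)
    spread.append(20 + 5 * m - 0.05 * (s - 720) + random.gauss(0, 10))

gap_without_score = slope(d, spread)
# Frisch-Waugh-Lovell: the coefficient on d in a regression that also includes
# the score equals the slope of spread on the part of d the score cannot explain.
gap_with_score = slope(residualize(score, d), spread)
print(f"{gap_without_score:.1f} vs {gap_with_score:.1f} bps")
```

With these made-up parameters the regression without scores should land near 6.5 bps (5 + 0.05 × 30) and the one with scores near the true 5 bps.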

We expect this algorithmic discrimination to be present across most large lenders, not just fintech lenders. Focusing on fintech lenders allows us to disentangle the effects of discretion and algorithms, but algorithms are used pervasively in mortgage lending, even by lenders that also rely on loan officer discretion.

While new attention on algorithms might allow a resurgence of Fair Lending claims, there are still substantial hurdles.

Because of Wal-Mart Stores, Inc. v. Dukes, Fair Lending class actions have been few and far between for the past decade. A new focus on algorithms could offer a path to establishing commonality and empower private litigants to bring suit.

However, key challenges remain. For hybrid lenders that use both algorithms and loan officers, disentangling the effect of their algorithms from that of their loan officers would be complex. Moreover, mortgage lending algorithms are highly secretive, which prevents us from fully understanding why and how they produce a disparate impact on minorities.

Fideres believes that by using centrally defined algorithms to make decisions, fintech lenders have reopened the door to large-scale Fair Lending class actions. While these cases remain untested, it is simply a matter of time before both plaintiffs and economists adapt to the new role of technology in finance.

1 Ping Cheng, Zhenguo Lin and Yingchun Liu, “Racial Discrepancy in Mortgage Interest Rates,” The Journal of Real Estate Finance and Economics, Vol. 51 (2015) pp. 101-120.

2 Emmanuel Martinez and Aaron Glantz, “How Reveal identified lending disparities in federal mortgage data,” Reveal from The Center for Investigative Reporting, February 2018, available at https://s3-us-west-2.amazonaws.com/revealnews.org/uploads/lending_disparities_whitepaper_180214.pdf

3 We found three class actions that were denied at class certification due to lack of commonality: Rodriguez v. National City Bank, No. 08-2059 (E.D. Pa.), In re Wells Fargo Residential Mortgage Lending Discrimination, No. 08-01930 (N.D. Cal.), and In re Countrywide Financial Mortgage Lending Practices Litigation, No. 08-1974 (W.D. Ky.).

4 Fintech lenders were identified following Greg Buchak, Gregor Matvos, Tomasz Piskorski and Amit Seru, “Fintech, Regulatory Arbitrage, and the Rise of Shadow Banks,” Journal of Financial Economics (2018), appendix available at http://jfe.rochester.edu/Buchak_Matvos_Piskorski_Seru_app.pdf

6 Some parts of Chicago appear blank in these maps. To ensure a like-for-like comparison, we mapped only conventional, 30-year, owner-occupied, first-lien mortgages, excluding reverse mortgages, commercial mortgages, loans with balloon payments, and outliers in property value or rate spread. Blank areas contained only mortgages outside this comparison group.

7 We separated fintech lenders using the methodology established in Buchak, Matvos, Piskorski, and Seru.

8 Robert Bartlett, Adair Morse, Richard Stanton and Nancy Wallace, “Consumer-Lending Discrimination in the FinTech Era,” November 2019, available at https://faculty.haas.berkeley.edu/morse/research/papers/discrim.pdf

Paul joined Fideres after completing a BSc in Economic History at the London School of Economics and Political Science. At LSE, Paul was a Trustee of the Student Union and President of the Music Society, having previously performed with two national choirs in the US. At Fideres, Paul leads our discrimination practice, analysing cases of algorithmic and indirect discrimination, and is a project manager in the Competition team. There, his work spans financial markets (his research on bond market manipulation has been published by the ABA), digital markets, and industrial organisation.
